Technological Singularity

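The Technological Singularity is upon us. It has already started: the cat is out of the bag and has given birth to kittens. We are carrying this technology in our pockets; see the 2007 Nobel Prize in Physics (awarded for the discovery of giant magnetoresistance, the effect behind modern hard-disk read heads).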

Watch Blood and Machines at TED by Ray Kurzweil.

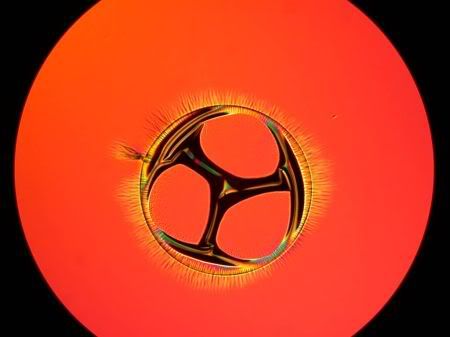

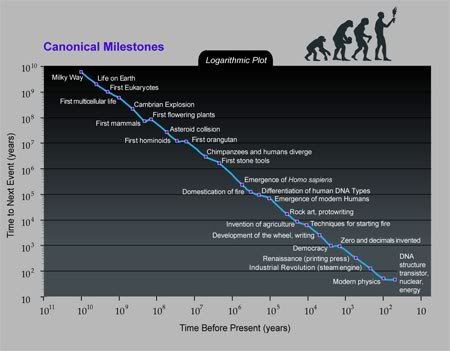

GRAPH 1; GRAPH 2; GRAPH 3; GRAPH 4

The Singularity is all about this logarithmic line… it’s a countdown:

(It's not precise in any way; it just gives an idea of what it is. I know, the Universe is 13 billion years old and here it adds up to 16 billion, sorry about that.)

10000000000 (10 billion) years between the Big Bang and the formation of our planetary system, then

5000000000 (5 billion) years later life arises on Earth,

1000000000 (1 billion) years from life to the first eukaryotes, then

500000000 (500 million) years for the Cambrian Explosion

100000000 (100 million) years for the first mammal after the Cambrian Explosion

50000000 (50 million) years from the first mammal to a hominoid

10000000 (10 million) years for the first stone tools, then

1000000 (1 million) years later came Homo sapiens

500000 (500 thousand) years later, Homo sapiens started to draw (rock art) and domesticated fire

50000 (50 thousand) years later they learned agriculture, then

10000 (10 thousand) years and they started to write, invented the wheel

5000 years later democracy was created

2000 years later came the printing press

300 years later the industrial revolution started

200 years later, modern physics was born

100 years later came the atom bomb, the discovery of DNA, and computers

50 years later humanity is connected in a single web

5 years later humans lost to the machines at chess

1 year later self-replicating (artificial life?) technologies (GNR: Genetics, Nanotechnology, and Robotics) are the main target of DARPA's investment funds

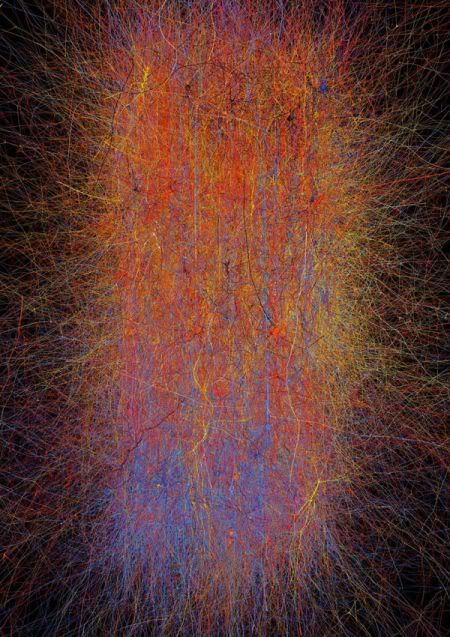

6 months later neuron connections are simulated in a machine

2 months later patents covering interconnections between biological beings and machines are filed (that's the last paradigm shift that I can confidently call a paradigm shift)

The pace of paradigm shifts reaches a singularity: near-infinite velocity. It's theoretical, but it has very strong evidence behind it, like global warming.
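The countdown above can be checked with a few lines of Python. This is only a rough sketch: the `INTERVALS` list copies the round figures from the list (with 6 months and 2 months written as fractions of a year), and the function names are made up for illustration. It adds up the intervals and computes how much each step shrinks compared with the one before it.

```python
# Intervals in years, copied from the countdown above (round figures, not precise dates).
INTERVALS = [
    10_000_000_000, 5_000_000_000, 1_000_000_000, 500_000_000,
    100_000_000, 50_000_000, 10_000_000, 1_000_000, 500_000,
    50_000, 10_000, 5_000, 2_000, 300, 200, 100, 50, 5, 1,
    6 / 12, 2 / 12,
]


def total_years(intervals):
    """Sum of all intervals: the age of the Universe implied by this list."""
    return sum(intervals)


def shrink_ratios(intervals):
    """Ratio between each interval and the next one (how quickly the pace accelerates)."""
    return [a / b for a, b in zip(intervals, intervals[1:])]


if __name__ == "__main__":
    print(f"total: {total_years(INTERVALS) / 1e9:.2f} billion years")
    ratios = shrink_ratios(INTERVALS)
    print(f"each step is {min(ratios):.1f}x to {max(ratios):.1f}x shorter than the previous one")
```

The total lands between 16 and 17 billion years, which is the overshoot admitted above, and every interval is shorter than the one before it.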

and now…

WHY THE FUTURE DOESN'T NEED US

by Bill Joy, co-founder of Sun Microsystems and co-author of the Java Language Specification

(excerpts; Wired 8.04, 2000)

Biological species almost never survive encounters with superior competitors.

The machines might be permitted to make all of their own decisions without human oversight, or else human control over the machines might be retained. What we do suggest is that the human race might easily permit itself to drift into a position of such dependence on the machines that it would have no practical choice but to accept all of the machines' decisions. Eventually a stage may be reached at which the decisions necessary to keep the system running will be so complex that human beings will be incapable of making them intelligently.

People won't be able to just turn the machines off

Human work will no longer be necessary; the masses will be superfluous, a useless burden on the system. If the elite is ruthless they may simply decide to exterminate the mass of humanity. If they are humane they may use propaganda or other psychological or biological techniques to reduce the birth rate until the mass of humanity becomes extinct, leaving the world to the elite. Or, if the elite consists of soft-hearted liberals, they may decide to play the role of good shepherds to the rest of the human race.

In a completely free marketplace, robotic industries would compete vigorously among themselves for matter, energy, and space, incidentally driving their price beyond human reach. Unable to afford the necessities of life, biological humans would be squeezed out of existence. Our main job in the centuries to go will be "ensuring continued cooperation from the robot industries" by passing laws decreeing that they be "nice" (Asimov's Three Laws of Robotics), and to describe how seriously dangerous a human can be "once transformed into an unbounded superintelligent robot." Moravec's view is that the robots will eventually succeed us - that humans clearly face extinction.

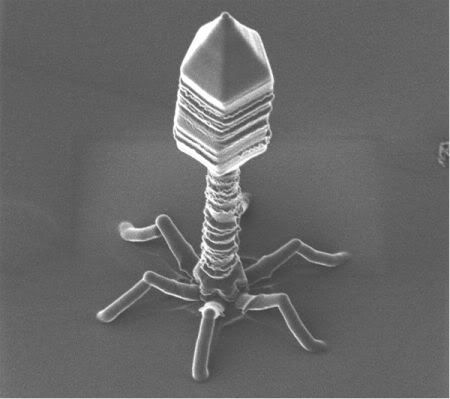

Accustomed to living with almost routine scientific breakthroughs, we have yet to come to terms with the fact that the most compelling 21st-century technologies - robotics, genetic engineering, and nanotechnology - pose a different threat than the technologies that have come before. Specifically, robots, engineered organisms, and nanobots share a dangerous amplifying factor: They can self-replicate. A bomb is blown up only once - but one bot can become many, and quickly get out of control.

Because of the recent rapid and radical progress in molecular electronics - where individual atoms and molecules replace lithographically drawn transistors - and related nanoscale technologies, we should be able to meet or exceed the Moore's law rate of progress for another 30 years. By 2030, we are likely to be able to build machines, in quantity, a million times as powerful as the personal computers of today - sufficient to implement the dreams of Kurzweil and Moravec.
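The "million times" figure can be sanity-checked with simple doubling arithmetic. The sketch below assumes a doubling period of 18 months, a common popular statement of Moore's law that the excerpt itself does not specify; the function name is invented for illustration.

```python
def growth_factor(years, doubling_period_years):
    """Multiplicative growth after `years` if capacity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Assumption: one doubling every 18 months (1.5 years); the excerpt gives no period.
    print(f"{growth_factor(30, 1.5):,.0f}x after 30 years")
    # For comparison: a slower doubling every 2 years over the same span.
    print(f"{growth_factor(30, 2.0):,.0f}x after 30 years")
```

With an 18-month doubling, 30 years gives 20 doublings, or roughly a million-fold increase; with a two-year doubling the same span gives 15 doublings, roughly 33 thousand-fold. The claim is therefore quite sensitive to the assumed doubling period.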

As this enormous computing power is combined with the manipulative advances of the physical sciences and the new, deep understandings in genetics, enormous transformative power is being unleashed. These combinations open up the opportunity to completely redesign the world, for better or worse: The replicating and evolving processes that have been confined to the natural world are about to become realms of human endeavor.

In designing software and microprocessors, I have never had the feeling that I was designing an intelligent machine. The software and hardware is so fragile and the capabilities of the machine to "think" so clearly absent that, even as a possibility, this has always seemed very far in the future.

But now, with the prospect of human-level computing power in about 30 years, a new idea suggests itself: that I may be working to create tools which will enable the construction of the technology that may replace our species. How do I feel about this? Very uncomfortable. Having struggled my entire career to build reliable software systems, it seems to me more than likely that this future will not work out as well as some people may imagine. My personal experience suggests we tend to overestimate our design abilities.

The dream of robotics is, first, that intelligent machines can do our work for us, allowing us lives of leisure, restoring us to Eden. Yet in his history of such ideas, Darwin Among the Machines, George Dyson warns: "In the game of life and evolution there are three players at the table: human beings, nature, and machines. I am firmly on the side of nature. But nature, I suspect, is on the side of the machines." As we have seen, Moravec agrees, believing we may well not survive the encounter with the superior robot species.

How soon could such an intelligent robot be built? The coming advances in computing power seem to make it possible by 2030. And once an intelligent robot exists, it is only a small step to a robot species - to an intelligent robot that can make evolved copies of itself.

A second dream of robotics is that we will gradually replace ourselves with our robotic technology, achieving near immortality by downloading our consciousnesses; it is this process that Danny Hillis thinks we will gradually get used to and that Ray Kurzweil elegantly details in The Age of Spiritual Machines.

But if we are downloaded into our technology, what are the chances that we will thereafter be ourselves or even human? It seems to me far more likely that a robotic existence would not be like a human one in any sense that we understand, that the robots would in no sense be our children, that on this path our humanity may well be lost.

It is most of all the power of destructive self-replication in genetics, nanotechnology, and robotics (GNR) that should give us pause. Self-replication is the modus operandi of genetic engineering, which uses the machinery of the cell to replicate its designs, and the prime danger underlying the gray goo myth in nanotechnology. Stories of run-amok robots like the Borg, replicating or mutating to escape from the ethical constraints imposed on them by their creators, are well established in our science fiction books and movies. It is even possible that self-replication may be more fundamental than we thought, and hence harder - or even impossible - to control.

Artificial Nano T4 Bacteriophage ∇

In November 1945, three months after the atomic bombings, Oppenheimer stood firmly behind the scientific attitude, saying, "It is not possible to be a scientist unless you believe that the knowledge of the world, and the power which this gives, is a thing which is of intrinsic value to humanity, and that you are using it to help in the spread of knowledge and are willing to take the consequences." Later, in 1948, Oppenheimer seemed to have reached another stage in his thinking, saying, "In some sort of crude sense which no vulgarity, no humor, no overstatement can quite extinguish, the physicists have known sin; and this is a knowledge they cannot lose."

Nearly 20 years ago, in the documentary The Day After Trinity, Freeman Dyson summarized the scientific attitudes that brought us to the nuclear precipice: "I have felt it myself. The glitter of nuclear weapons. It is irresistible if you come to them as a scientist. To feel it's there in your hands, to release this energy that fuels the stars, to let it do your bidding. To perform these miracles, to lift a million tons of rock into the sky. It is something that gives people an illusion of illimitable power, and it is, in some ways, responsible for all our troubles - this, what you might call technical arrogance, that overcomes people when they see what they can do with their minds."

The 21st-century technologies - genetics, nanotechnology, and robotics (GNR) - are so powerful that they can spawn whole new classes of accidents and abuses. Most dangerously, for the first time, these accidents and abuses are widely within the reach of individuals or small groups. They will not require large facilities or rare raw materials. Knowledge alone will enable the use of them.

Thus we have the possibility not just of weapons of mass destruction but of knowledge-enabled mass destruction (KMD), this destructiveness hugely amplified by the power of self-replication.

I think it is no exaggeration to say we are on the cusp of the further perfection of extreme evil, an evil whose possibility spreads well beyond that which weapons of mass destruction bequeathed to the nation-states, on to a surprising and terrible empowerment of extreme individuals.

The new Pandora's boxes of genetics, nanotechnology, and robotics are almost open, yet we seem hardly to have noticed. Ideas can't be put back in a box. They can be freely copied. Once they are out, they are out. Churchill remarked, in a famous left-handed compliment, that the American people and their leaders "invariably do the right thing, after they have examined every other alternative." In this case, however, we must act more presciently, as to do the right thing only at last may be to lose the chance to do it at all.

As Thoreau said, "We do not ride on the railroad; it rides upon us"; and this is what we must fight, in our time. The question is, indeed, Which is to be master? Will we survive our technologies?

We are being propelled into this new century with no plan, no control, no brakes. Have we already gone too far down the path to alter course? I don't believe so, but we aren't trying yet, and the last chance to assert control - the fail-safe point - is rapidly approaching. We have our first pet robots, as well as commercially available genetic engineering techniques, and our nanoscale techniques are advancing rapidly. While the development of these technologies proceeds through a number of steps, it isn't necessarily the case - as happened in the Manhattan Project and the Trinity test - that the last step in proving a technology is large and hard. The breakthrough to wild self-replication in robotics, genetic engineering, or nanotechnology could come suddenly, reprising the surprise we felt when we learned of the cloning of a mammal.

Neither should we pursue near immortality without considering the costs, without considering the commensurate increase in the risk of extinction. Immortality, while perhaps the original, is certainly not the only possible utopian dream.

In Fraternités, Jacques Attali describes how our dreams of utopia have changed over time: "At the dawn of societies, men saw their passage on Earth as nothing more than a labyrinth of pain, at the end of which stood a door leading, via their death, to the company of gods and to Eternity. With the Hebrews and then the Greeks, some men dared free themselves from theological demands and dream of an ideal City where Liberty would flourish. Others, noting the evolution of the market society, understood that the liberty of some would entail the alienation of others, and they sought Equality."

The Dalai Lama argues that the most important thing is for us to conduct our lives with love and compassion for others, and that our societies need to develop a stronger notion of universal responsibility and of our interdependency; he proposes a standard of positive ethical conduct for individuals and societies that seems consonant with Attali's Fraternity utopia.

(…)

People who know about the dangers still seem strangely silent. When pressed, they trot out the "this is nothing new" riposte - as if awareness of what could happen is response enough. They tell me, There are universities filled with bioethicists who study this stuff all day long. They say, All this has been written about before, and by experts. They complain, Your worries and your arguments are already old hat.

Do you remember the beautiful penultimate scene in Manhattan where Woody Allen is lying on his couch and talking into a tape recorder? He is writing a short story about people who are creating unnecessary, neurotic problems for themselves, because it keeps them from dealing with more unsolvable, terrifying problems about the universe.

It is required that scientists and engineers adopt a strong code of ethical conduct, resembling the Hippocratic oath, and that they have the courage to whistleblow as necessary, even at high personal cost.

-----------------------------

"From these several considerations I think it inevitably follows, that as new species in the course of time are formed through natural selection, others will become rarer and rarer, and finally extinct. The forms which stand in closest competition with those undergoing modification and improvement, will naturally suffer most. And we have seen in the chapter on the Struggle for Existence that it is the most closely-allied forms, varieties of the same species, and species of the same genus or of related genera, which, from having nearly the same structure, constitution, and habits, generally come into the severest competition with each other. Consequently, each new variety or species, during the progress of its formation, will generally press hardest on its nearest kindred, and tend to exterminate them."

CHARLES DARWIN, The Origin of Species / Natural Selection / Extinction

∆ A representation of a mammalian neocortical column, the basic building block of the cortex. The representation shows the complexity of this part of the brain, which has now been modeled using a supercomputer. By demonstrating that their simulation is realistic, the researchers say, these results suggest that an entire mammal brain could be completely modeled within three years, and a human brain within the next decade.

...

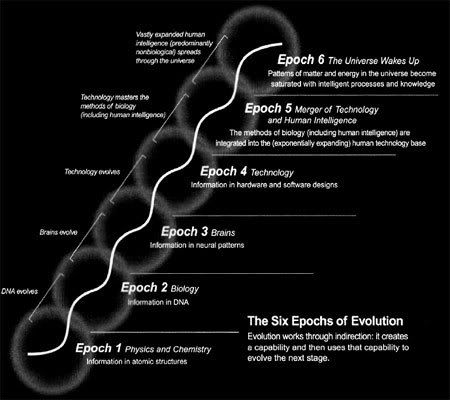

THE SIX EPOCHS:

(IN THE EVOLUTION OF PATTERNS OF INFORMATION)

(from Singularity is Near, Ray Kurzweil, 2005)

"First we build the tools, then they build us."

—MARSHALL MCLUHAN

"The future ain't what it used to be."

—YOGI BERRA

"You know, things are going to be really different! ... No, no, I mean really different!"

—MARK MILLER (COMPUTER SCIENTIST) TO ERIC DREXLER, AROUND 1986

"What are the consequences of this event? When greater-than-human intelligence drives progress, that progress will be much more rapid. In fact, there seems no reason why progress itself would not involve the creation of still more intelligent entities—on a still-shorter time scale. The best analogy that I see is with the evolutionary past: Animals can adapt to problems and make inventions, but often no faster than natural selection can do its work—the world acts as its own simulator in the case of natural selection. We humans have the ability to internalize the world and conduct "what if's" in our heads; we can solve many problems thousands of times faster than natural selection. Now, by creating the means to execute those simulations at much higher speeds, we are entering a regime as radically different from our human past as we humans are from the lower animals. From the human point of view, this change will be a throwing away of all the previous rules, perhaps in the blink of an eye, an exponential runaway beyond any hope of control."

—VERNOR VINGE, "THE TECHNOLOGICAL SINGULARITY," 1993

"Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an "intelligence explosion," and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make."

—IRVING JOHN GOOD, "SPECULATIONS CONCERNING THE FIRST ULTRAINTELLIGENT MACHINE," 1965

Epoch One: Physics and Chemistry.

We can trace our origins to a state that represents information in its basic structures: patterns of matter and energy. Recent theories of quantum gravity hold that time and space are broken down into discrete quanta, essentially fragments of information. There is controversy as to whether matter and energy are ultimately digital or analog in nature, but regardless of the resolution of this issue, we do know that atomic structures store and represent discrete information.

A few hundred thousand years after the Big Bang, atoms began to form, as electrons became trapped in orbits around nuclei consisting of protons and neutrons. The electrical structure of atoms made them "sticky." Chemistry was born a few million years later as atoms came together to create relatively stable structures called molecules. Of all the elements, carbon proved to be the most versatile; it's able to form bonds in four directions (versus one to three for most other elements), giving rise to complicated, information-rich, three-dimensional structures. The rules of our universe and the balance of the physical constants that govern the interaction of basic forces are so exquisitely, delicately, and exactly appropriate for the codification and evolution of information (resulting in increasing complexity) that one wonders how such an extraordinarily unlikely situation came about.

Where some see a divine hand, others see our own hands—namely, the anthropic principle, which holds that only in a universe that allowed our own evolution would we be here to ask such questions. Recent theories of physics concerning multiple universes speculate that new universes are created on a regular basis, each with its own unique rules, but that most of these either die out quickly or else continue without the evolution of any interesting patterns (such as Earth-based biology has created) because their rules do not support the evolution of increasingly complex forms. It's hard to imagine how we could test these theories of evolution applied to early cosmology, but it's clear that the physical laws of our universe are precisely what they need to be to allow for the evolution of increasing levels of order and complexity.

Epoch Two: Biology and DNA.

In the second epoch, starting several billion years ago, carbon-based compounds became more and more intricate until complex aggregations of molecules formed self-replicating mechanisms, and life originated. Ultimately, biological systems evolved a precise digital mechanism (DNA) to store information describing a larger society of molecules. This molecule and its supporting machinery of codons and ribosomes enabled a record to be kept of the evolutionary experiments of this second epoch.

Epoch Three: Brains.

Each epoch continues the evolution of information through a paradigm shift to a further level of "indirection." (That is, evolution uses the results of one epoch to create the next.) For example, in the third epoch, DNA-guided evolution produced organisms that could detect information with their own sensory organs and process and store that information in their own brains and nervous systems. These were made possible by second-epoch mechanisms (DNA and epigenetic information of proteins and RNA fragments that control gene expression), which (indirectly) enabled and defined third-epoch information-processing mechanisms (the brains and nervous systems of organisms).

The third epoch started with the ability of early animals to recognize patterns, which still accounts for the vast majority of the activity in our brains. Ultimately, our own species evolved the ability to create abstract mental models of the world we experience and to contemplate the rational implications of these models. We have the ability to redesign the world in our own minds and to put these ideas into action.

Epoch Four: Technology.

Combining the endowment of rational and abstract thought with our opposable thumb, our species ushered in the fourth epoch and the next level of indirection: the evolution of human-created technology. This started out with simple mechanisms and developed into elaborate automata (automated mechanical machines). Ultimately, with sophisticated computational and communication devices, technology was itself capable of sensing, storing, and evaluating elaborate patterns of information. To compare the rate of progress of the biological evolution of intelligence to that of technological evolution, consider that the most advanced mammals have added about one cubic inch of brain matter every hundred thousand years, whereas we are roughly doubling the computational capacity of computers every year (see the next chapter). Of course, neither brain size nor computer capacity is the sole determinant of intelligence, but they do represent enabling factors. If we place key milestones of both biological evolution and human technological development on a single graph plotting both the x-axis (number of years ago) and the y-axis (the paradigm-shift time) on logarithmic scales, we find a reasonably straight line (continual acceleration), with biological evolution leading directly to human-directed development.
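The log-log plot described here can be roughly imitated in Python. The sketch below does not use Kurzweil's data: as an assumption, it reuses the round-number intervals from the countdown earlier in this post, treats each interval as the "paradigm-shift time", and treats the time from the start of that interval to the end of the list as the "number of years ago". The function names are hypothetical, and the fit is a plain least-squares slope on base-10 logarithms.

```python
import math

# Round figures (in years) from the countdown earlier in this post, not Kurzweil's data set.
INTERVALS = [
    10_000_000_000, 5_000_000_000, 1_000_000_000, 500_000_000,
    100_000_000, 50_000_000, 10_000_000, 1_000_000, 500_000,
    50_000, 10_000, 5_000, 2_000, 300, 200, 100, 50, 5, 1,
    6 / 12, 2 / 12,
]


def years_ago(intervals):
    """For each interval, the time from its start to the end of the list (a suffix sum)."""
    out, running = [], 0.0
    for span in reversed(intervals):
        running += span
        out.append(running)
    return out[::-1]


def loglog_slope(xs, ys):
    """Ordinary least-squares slope of log10(ys) against log10(xs)."""
    lx = [math.log10(x) for x in xs]
    ly = [math.log10(y) for y in ys]
    mx, my = sum(lx) / len(lx), sum(ly) / len(ly)
    num = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    den = sum((a - mx) ** 2 for a in lx)
    return num / den


if __name__ == "__main__":
    xs = years_ago(INTERVALS)
    print(f"log-log slope: {loglog_slope(xs, INTERVALS):.2f}")
```

A slope close to 1 means that each paradigm-shift time is a roughly constant fraction of the time remaining, which is what a straight line on this kind of plot expresses.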

Epoch Five: The Merger of Human Technology with Human Intelligence.

Looking ahead several decades, the Singularity will begin with the fifth epoch. It will result from the merger of the vast knowledge embedded in our own brains with the vastly greater capacity, speed, and knowledge-sharing ability of our technology. The fifth epoch will enable our human-machine civilization to transcend the human brain's limitations of a mere hundred trillion extremely slow connections.

The Singularity will allow us to overcome age-old human problems and vastly amplify human creativity. We will preserve and enhance the intelligence that evolution has bestowed on us while overcoming the profound limitations of biological evolution. But the Singularity will also amplify the ability to act on our destructive inclinations, so its full story has not yet been written.

Epoch Six: The Universe Wakes Up.

In the aftermath of the Singularity, intelligence, derived from its biological origins in human brains and its technological origins in human ingenuity, will begin to saturate the matter and energy in its midst. It will achieve this by reorganizing matter and energy to provide an optimal level of computation to spread out from its origin on Earth.

We currently understand the speed of light as a bounding factor on the transfer of information. Circumventing this limit has to be regarded as highly speculative, but there are hints that this constraint may be able to be superseded. If there are even subtle deviations, we will ultimately harness this superluminal ability. Whether our civilization infuses the rest of the universe with its creativity and intelligence quickly or slowly depends on its immutability. In any event the "dumb" matter and mechanisms of the universe will be transformed into exquisitely sublime forms of intelligence, which will constitute the sixth epoch in the evolution of patterns of information.

This is the ultimate destiny of the Singularity and of the universe.

…

There are some laws that come to mind:

Dollo's Law:

"Evolution is not reversible"

Amara's law:

"We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run".

Hanlon's razor:

"Never attribute to malice that which can be adequately explained by stupidity."

Wirth's law:

"Software gets slower faster than hardware gets faster."

Sturgeon's Revelation:

"Ninety percent of everything is crap."

&

Murphy's (Finagle's) Law:

"If anything can go wrong, it will"; less commonly, "If it can happen, it will happen."

Labels: GNR, Paradigm Shift, Technological Singularity